About Me

Postdoctoral Fellow in the Leinweber Institute for Theoretical Physics (LITP), University of Michigan -- Ann Arbor.

I'm a prize fellow at the Leinweber Institute for Theoretical Physics (LITP), University of Michigan, where I work on small-scale Lyman alpha forest cosmology, machine learning, and uncertainty quantification (UQ). A theorist by training, I work at the intersection of data and simulations, though I guess I've ended up thinking more about data than simulations these days.

Current Research Focus

- Lyα P1D Cosmology with Emulators: Using ML-driven emulation with a suite of hydrodynamical simulations to get cosmological constraints from the Lyα forest, probing the underlying dark matter density field at ~1 - 10 h/Mpc scales.

- Multi-Fidelity Emulation & UQ: Developing multi-fidelity models to approximate expensive simulations while keeping uncertainties in check. This lets us explore astrophysical models without spending a lifetime running full N-body simulations.

- DESI (Lyα & DLA Science): Improving the DLA detection pipeline and working out statistically better ways to detect DLAs.

Background

I did my PhD at UC Riverside with Simeon Bird, working on multi-fidelity ML for cosmology simulations and receiving a NASA FINESST fellowship. During that time, I also maintained and improved the Gaussian Process-based DLA model (with Roman Garnett), which is now part of DESI's DLA pipeline.

I also worked on Bayesian hierarchical inference for gravitational waves (with the LLNL PBH collaboration led by Will Dawson), studying the formation of binary black holes.

Currently, I also work on photometric redshift UQ for LSST (with Camille Avestruz), making sure that when LSST measures billions of galaxy redshifts, we can actually trust the results.

Animations you might like

I believe a video is worth a million words. So instead of writing lots of words, I made some videos. There are ~6 million words below :)

(Background image: Gas particles in the Astrid simulation model (120 cMpc/h).)

(Demo: Multi-fidelity emulation in 1D toy data (See jibanCat/nargp_tensorflow).)

(Demo: Bayesian optimization demo, with an increasing observational noise.)

(Demo: Inferring quasar redshift using a data-driven Gaussian process (2006.07343).)

(Demo: Inferring damped Lyman alpha absorbers (DLAs, typically neutral hydrogen gas around high-z dwarf galaxies) using Gaussian processes and Bayesian model selection (2003.11036).)

MF-Box: Multi-fidelity and multi-scale emulation

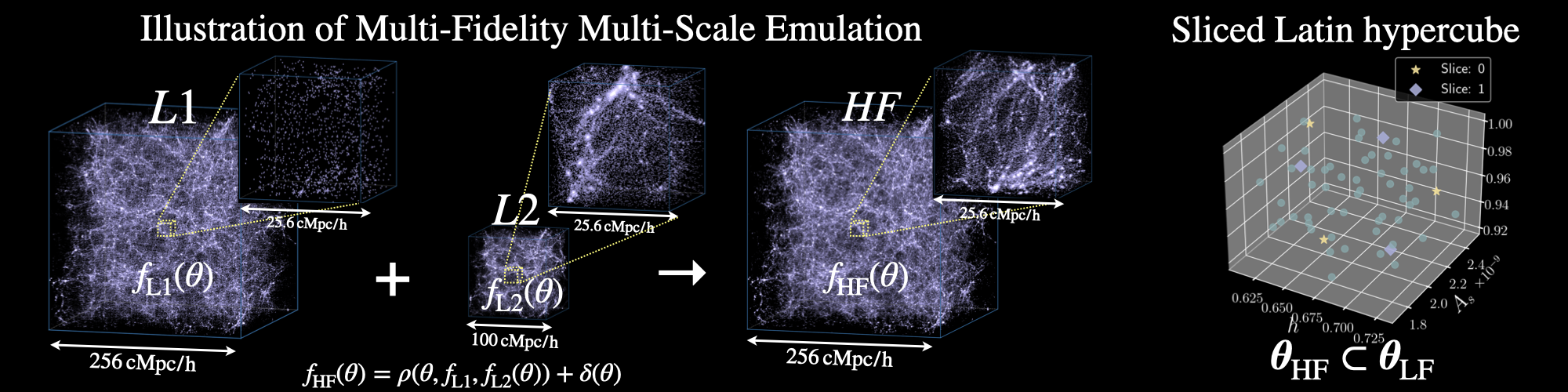

We have extended the idea of multi-fidelity modeling and developed MF-Box (arXiv:2306.03144), a method for modeling N-body simulations that incorporates multiple particle loads and physical scales. By using two types of cost-effective simulations, L1 and L2, we construct a model that accurately predicts summary statistics derived from the high-fidelity simulation across a wide range of cosmological parameter space. L1 simulates a low particle load in a large volume, while L2 simulates a low particle load in a small volume. By combining the simulations from different volumes, we achieve precise interpolation in a high-dimensional parameter space across scales, significantly reducing computational costs compared to running an additional high-fidelity simulation. MF-Box is versatile and can be applied to various simulation suites, providing an effective solution for interpolating simulations across multiple physical scales using affordable approximations.

Gaussian process DLA finder

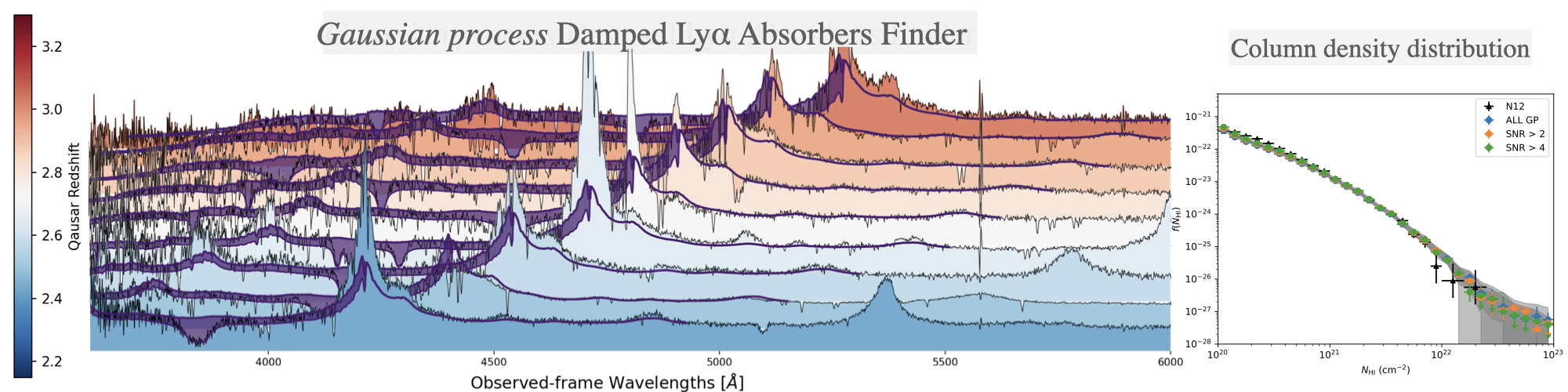

I am interested in using machine learning techniques to model the emission of quasars, the light produced around supermassive black holes. I have made improvements to a Gaussian process (GP) based model originally developed by Roman Garnett (arXiv:1605.04460). My modifications in Ho-Bird-Garnett (2021) allow for the identification of damped Lyman alpha absorbers (DLAs) in the intergalactic medium, accounting for sub-DLAs and including a model for the quasar mean flux (arXiv:2003.11036, arXiv:2103.10964). Furthermore, we perform probabilistic inference on quasar redshifts using the Gaussian process based model, as detailed in Fauber et al. (2020) (arXiv:2006.07343).

(Thanks to Yongda Zhu for sharing the awesome XQR-30 image made by Dr. Anna-Christina Eilers, which I used as a reference to make the above DLA figure.)

Multi-fidelity emulation for cosmological simulations

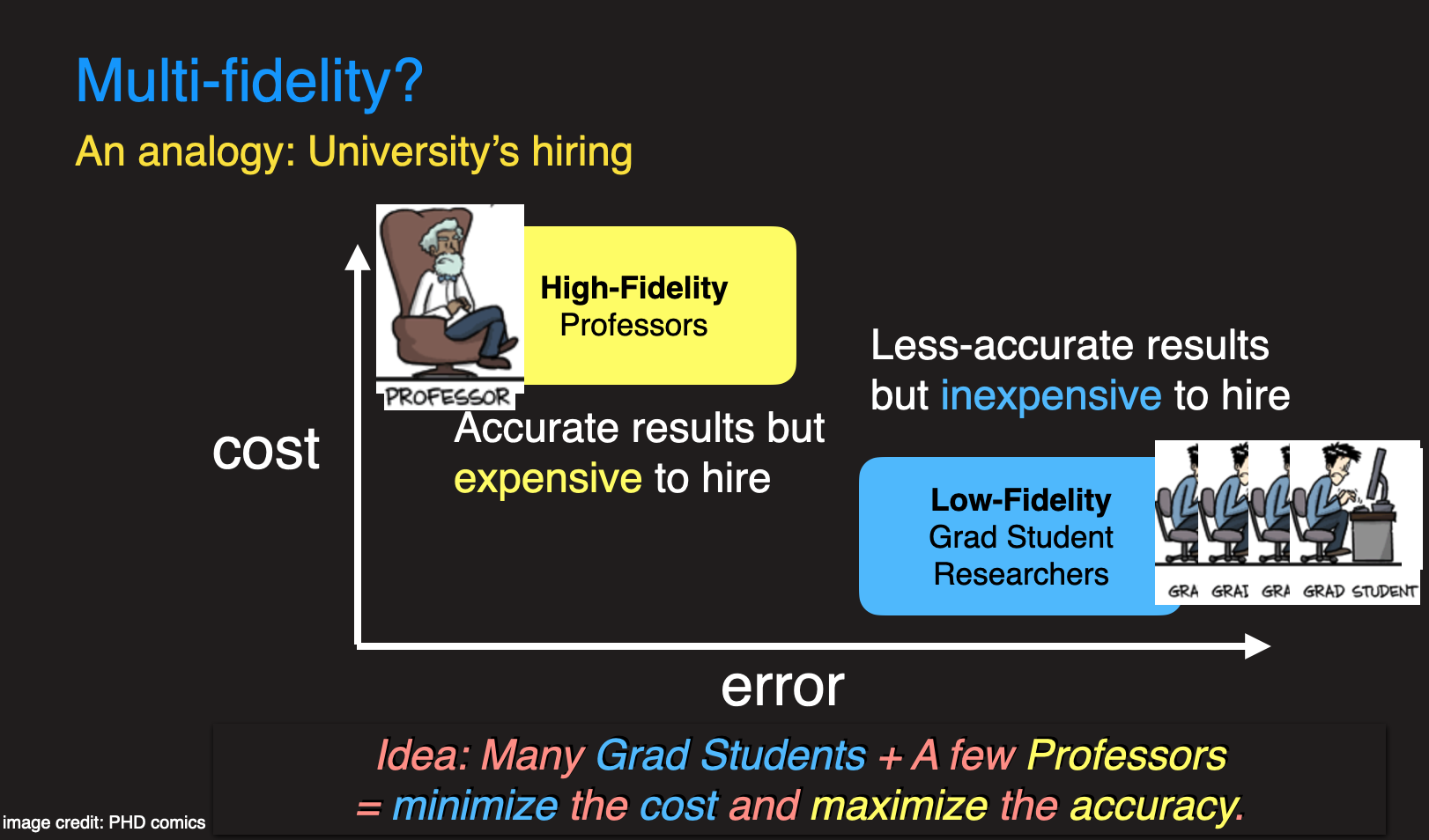

The concept of multi-fidelity modeling is straightforward. We employ many inexpensive approximations to explore different parameter settings, and rely on only a handful of costly examples to improve the accuracy of those approximations. To illustrate this, consider the hiring process at a university. We have numerous graduate student workers who tackle the groundwork of exploring various research topics, while only a few professors are needed to provide guidance and refine the outputs of the students. You can find more details in the paper by Ho-Bird-Shelton (2021), available at arXiv:2105.01081. Recently, we have applied the multi-fidelity technique to emulate the Lyman-alpha forest; see Fernandez-Ho-Bird (2022), arXiv:2207.06445. As part of my FINESST grant, I will continue utilizing multi-fidelity methods to model halo mass functions and weak lensing statistics.
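For a feel of the idea in code, here is a minimal 1D sketch. It is only a toy and not the Gaussian-process emulator from the papers above: the two functions `f_high` and `f_low` are made-up stand-ins for an expensive and a cheap simulation, the cheap runs are simply interpolated, and the few expensive runs are used to fit a linear scaling `rho` plus a straight-line correction `a + b * x`. All names and numbers here are assumptions chosen for illustration.

```python
import numpy as np


def f_high(x):
    """Toy stand-in for an expensive, accurate simulation (assumed form)."""
    return (6 * x - 2) ** 2 * np.sin(12 * x - 4)


def f_low(x):
    """Toy stand-in for a cheap, biased approximation of f_high (assumed form)."""
    return 0.5 * f_high(x) + 10 * (x - 0.5) - 5


# Many cheap runs, only a handful of expensive ones.
x_low = np.linspace(0.0, 1.0, 51)
x_high = np.array([0.0, 0.4, 0.6, 1.0])
y_low = f_low(x_low)
y_high = f_high(x_high)


def low_surrogate(x):
    """Cheap surrogate: linear interpolation of the low-fidelity runs."""
    return np.interp(x, x_low, y_low)


# Fit y_high ~= rho * low_surrogate(x) + a + b * x by least squares,
# using only the four high-fidelity points.
design = np.column_stack(
    [low_surrogate(x_high), np.ones_like(x_high), x_high]
)
(rho, a, b), *_ = np.linalg.lstsq(design, y_high, rcond=None)


def predict_multi_fidelity(x):
    """Corrected prediction: scaled low-fidelity surrogate plus linear term."""
    return rho * low_surrogate(x) + a + b * x


# Compare against using the four high-fidelity points alone.
x_test = np.linspace(0.0, 1.0, 201)
truth = f_high(x_test)
err_mf = np.max(np.abs(predict_multi_fidelity(x_test) - truth))
err_hf_only = np.max(np.abs(np.interp(x_test, x_high, y_high) - truth))

print(f"fitted rho = {rho:.3f}")
print(f"max error, multi-fidelity      : {err_mf:.3f}")
print(f"max error, high-fidelity only  : {err_hf_only:.3f}")
```

In this toy setup the cheap runs carry the shape of the function and the four expensive runs only have to pin down three numbers, which is why the combined prediction should do much better than interpolating the expensive runs alone. Real emulators replace both the interpolation and the linear correction with more flexible models that also report uncertainties.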

Motivation

My research is driven by my passion for easing the workload in academia. Over time, I have noticed many people becoming discouraged with research because they were told that spending long hours on tedious tasks was the only way to produce results. However, I strongly believe that we can use automated tools and better models to replace repetitive work, allowing academics to focus more on creative thinking.